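BT Panel (宝塔)

```shell
# Download the LTS installer with curl if it is available, otherwise with wget, then run it
url=https://download.bt.cn/install/install_lts.sh
if [ -f /usr/bin/curl ]; then curl -sSO "$url"; else wget -O install_lts.sh "$url"; fi
bash install_lts.sh ed8484bec
```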

```shell
# Alternative: download with wget and run the installer with sudo
wget -O install.sh https://download.bt.cn/install/install_lts.sh && sudo bash install.sh ed8484bec
```

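```shell
# Delete the security-entrance path setting so the panel no longer needs the random URL suffix
rm -f /www/server/panel/data/admin_path.pl
```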

1Panel

```shell
curl -sSL https://resource.fit2cloud.com/1panel/package/quick_start.sh -o quick_start.sh && sh quick_start.sh
```

```shell
# Same installer, run with bash as root
curl -sSL https://resource.fit2cloud.com/1panel/package/quick_start.sh -o quick_start.sh && sudo bash quick_start.sh
```

SSH connection

VM network configuration: edit the interface file, then restart the network service.

```shell
sudo vi /etc/sysconfig/network-scripts/ifcfg-eth0
sudo systemctl restart network
```
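Setting a static address usually takes only a few keys in `ifcfg-eth0`. The sketch below writes such a file. It is a minimal example: the function name is hypothetical, and the IP, gateway and DNS values in the usage line are placeholders for a typical VM NAT subnet. The function writes to a path you choose, so you can review the result before copying it into place. Editing the existing file in place instead keeps any `UUID`/`HWADDR` lines it already contains.

```shell
# Write a minimal static-IP interface file (CentOS 7 network-scripts format).
# Usage: write_ifcfg <output-file> <device> <ip> <gateway> <dns>
# All address values are placeholders: use your own VM's subnet.
write_ifcfg() {
  local out=$1 dev=$2 ip=$3 gw=$4 dns=$5
  cat > "$out" <<EOF
TYPE=Ethernet
DEVICE=${dev}
NAME=${dev}
ONBOOT=yes
BOOTPROTO=static
IPADDR=${ip}
NETMASK=255.255.255.0
GATEWAY=${gw}
DNS1=${dns}
EOF
}
```

Usage: run `write_ifcfg /tmp/ifcfg-eth0 eth0 192.168.88.130 192.168.88.2 223.5.5.5`, check the file, then copy it to `/etc/sysconfig/network-scripts/ifcfg-eth0` and restart the network service as shown above.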

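Extract archives

```shell
# -z: gzip, -x: extract, -v: list files, -f: archive file, -C: target directory
tar zxvf xxx.tgz -C /home
tar -zxvf xxx.tar.gz -C /home
```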

Docker installation

```shell
# Remove any old Docker packages first
yum remove docker \
  docker-client \
  docker-client-latest \
  docker-common \
  docker-latest \
  docker-latest-logrotate \
  docker-logrotate \
  docker-engine \
  docker-selinux
```

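If yum fails here with errors saying it cannot find the repository metadata, the cause is that CentOS 7 reached end of life on June 30, 2024. Its official repositories are no longer maintained and have been moved to the archive (vault.centos.org), so the default mirror addresses no longer serve the required files.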

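Solution: back up the base repo file, then point it at the vault archive.

```shell
sudo cp /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
```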

Replace the contents of `/etc/yum.repos.d/CentOS-Base.repo` with the following. Each active `baseurl` now points at the 7.9.2009 tree in the vault:

```ini
# CentOS-Base.repo
#
# The mirror system uses the connecting IP address of the client and the
# update status of each mirror to pick mirrors that are updated to and
# geographically close to the client.  You should use this for CentOS updates
# unless you are manually picking other mirrors.
#
# If the mirrorlist= does not work for you, as a fall back you can try the
# remarked out baseurl= line instead.
#
#

[base]
name=CentOS-$releasever - Base
#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=os&infra=$infra
#baseurl=http://mirror.centos.org/centos/$releasever/os/$basearch/
#baseurl=http://vault.centos.org/7.9.2009/x86_64/os/
baseurl=http://vault.centos.org/7.9.2009/os/$basearch/
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

#released updates
[updates]
name=CentOS-$releasever - Updates
#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=updates&infra=$infra
#baseurl=http://mirror.centos.org/centos/$releasever/updates/$basearch/
#baseurl=http://vault.centos.org/7.9.2009/x86_64/os/
baseurl=http://vault.centos.org/7.9.2009/updates/$basearch/
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful
[extras]
name=CentOS-$releasever - Extras
#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=extras&infra=$infra
#baseurl=http://mirror.centos.org/centos/$releasever/extras/$basearch/
#baseurl=http://vault.centos.org/7.9.2009/x86_64/os/
baseurl=http://vault.centos.org/7.9.2009/extras/$basearch/
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages
[centosplus]
name=CentOS-$releasever - Plus
#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=centosplus&infra=$infra
#baseurl=http://mirror.centos.org/centos/$releasever/centosplus/$basearch/
#baseurl=http://vault.centos.org/7.9.2009/x86_64/os/
baseurl=http://vault.centos.org/7.9.2009/centosplus/$basearch/
gpgcheck=1
enabled=0
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7
```
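You can also script this change with sed instead of editing each section by hand. The sketch below is a minimal example with a hypothetical function name. It assumes the stock CentOS 7 `.repo` files, which contain active `mirrorlist=` lines and commented `#baseurl=http://mirror.centos.org/centos/$releasever/...` lines, and it pins to the final 7.9.2009 vault release, as the file above does:

```shell
# Point stock CentOS 7 repo files at the vault archive (run as root).
# Usage: use_vault_repos /etc/yum.repos.d/CentOS-*.repo
use_vault_repos() {
  local f
  for f in "$@"; do
    cp "$f" "$f.bak" || return 1   # keep a copy of each original file
    sed -i \
      -e 's|^mirrorlist=|#mirrorlist=|' \
      -e 's|^#baseurl=http://mirror\.centos\.org/centos/\$releasever|baseurl=http://vault.centos.org/7.9.2009|' \
      "$f"
  done
}
```

The function comments out each `mirrorlist` line and re-enables the `baseurl` line with the vault prefix. For example, `#baseurl=http://mirror.centos.org/centos/$releasever/os/$basearch/` becomes `baseurl=http://vault.centos.org/7.9.2009/os/$basearch/`. Afterwards, refresh the metadata with `yum clean all && yum makecache`.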

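```shell
# Prerequisites
sudo yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Docker CE repository (Aliyun mirror)
sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Make every package URL in the repo file use the mirror
sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

# Install Docker Engine, CLI, containerd and the Buildx/Compose plugins
sudo yum install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

# Start Docker now and on every boot
sudo systemctl enable --now docker
```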

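Configure a registry mirror (pull accelerator). Replace `xxxx` with your own Aliyun accelerator address:

```shell
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://xxxx.mirror.aliyuncs.com"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
```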
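A malformed `daemon.json` prevents dockerd from starting, so it can be worth checking the file before the restart. The sketch below is a minimal example with a hypothetical helper name. It assumes `python3` is installed; its standard `json.tool` module exits with a non-zero status on invalid JSON:

```shell
# Return 0 if the given file is valid JSON, non-zero otherwise (assumes python3).
check_json() {
  python3 -m json.tool "$1" > /dev/null
}
```

Usage: `check_json /etc/docker/daemon.json && sudo systemctl restart docker`. Once Docker is running again, `docker info` should list the mirror under "Registry Mirrors".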

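Docker command reference

```shell
# Restart the Docker daemon
systemctl restart docker

# Stop all running containers (-r: do nothing if none are running, GNU xargs)
docker ps -q | xargs -r docker stop

# Set the restart policy when creating a container (docker run takes an image, not a container)
docker run -d --restart=always <image>

# Change the restart policy of an existing container
docker update --restart=always <container-id-or-name>
docker update --restart=no <container-id-or-name>

# Apply a policy to all containers
docker update --restart=always $(docker ps -aq)

# Apply it to specific containers
docker update --restart=always mysql mysql5 redis nacos minio 1Panel-mysql 1Panel-halo 1Panel-frpc
docker update --restart=always 1Panel-frpc

# Turn automatic restart off for all containers
docker update --restart=no $(docker ps -aq)
```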
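Before changing policies in bulk, it can help to see what each container currently uses. The sketch below uses a hypothetical helper name and calls `docker inspect` with a Go template. `.Name` and `.HostConfig.RestartPolicy.Name` are fields of the inspect output, and Docker reports names with a leading `/`, which the function strips:

```shell
# Print "<container-name> <restart-policy>" for every container, running or not.
list_restart_policies() {
  local ids
  ids=$(docker ps -aq)
  if [ -z "$ids" ]; then
    return 0                           # no containers at all
  fi
  # $ids is left unquoted on purpose so each ID becomes a separate argument
  docker inspect --format '{{.Name}} {{.HostConfig.RestartPolicy.Name}}' $ids \
    | sed 's|^/||'
}
```

The function prints one line per container, for example `mysql always`. There is also the `--restart=unless-stopped` policy. It behaves like `always`, except that a container you stopped by hand stays stopped when the Docker daemon restarts.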

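Change DNS: edit `/etc/resolv.conf`. As its header says, NetworkManager generates this file and may overwrite manual edits.

```text
# Generated by NetworkManager
nameserver 8.8.8.8
nameserver 223.5.5.5
```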

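Free an occupied port (Windows)

```bat
REM Find the PID holding the port, then end it with: taskkill /PID <pid> /F
netstat -aon | findstr <port>
```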
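On Linux the equivalent tools are `ss -lntp` or `lsof -i :<port>`. The sketch below, with a hypothetical helper name, extracts the PIDs listening on a given TCP port from `ss` output. It reads that output on stdin and assumes the `users:(("name",pid=N,fd=M))` format that `ss -p` prints. The header line is skipped naturally because its fourth column never ends in `:<port>`:

```shell
# Print the PIDs (one per line) listening on TCP port $1, reading `ss -lntp` output on stdin.
# Usage: sudo ss -lntp | pids_on_port 8080    then: sudo kill <pid>
pids_on_port() {
  local port=$1
  # Column 4 is the local address, e.g. 0.0.0.0:8080 or [::]:8080
  awk -v p=":$port" 'substr($4, length($4) - length(p) + 1) == p' \
    | grep -o 'pid=[0-9]*' \
    | cut -d= -f2 \
    | sort -u
}
```

Matching on the `:<port>` suffix keeps port 8080 from also matching 18080.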

Networking

Java projects usually need to reach various middleware, such as MySQL and Redis. Can our containers reach each other? Let's test it.

First, inspect the MySQL container and note its IP address:

```shell
# 1. Inspect the container and look for the Networks.bridge.IPAddress property
docker inspect mysql
# Or filter the output with --format
docker inspect --format='{{range .NetworkSettings.Networks}}{{println .IPAddress}}{{end}}' mysql
# Output:
172.17.0.2

# 2. Open a shell in the dd container (our Java application)
docker exec -it dd bash

# 3. Inside the container, test connectivity with ping
ping 172.17.0.2
# Output:
PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.
64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.053 ms
64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.059 ms
64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.058 ms
```

The containers can reach each other.

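However, a container's IP is a virtual address that is not permanently tied to that container. If we hard-code the IP during development, the MySQL container may get a different IP at deployment, and the connection will fail.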

So we need Docker's networking features to solve this problem. Official documentation:

https://docs.docker.com/engine/reference/commandline/network/

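Common commands:

`docker network create`: create a network

`docker network ls`: list all networks

`docker network rm`: remove a network

`docker network prune`: remove all unused networks

`docker network connect`: connect a container to a network

`docker network disconnect`: disconnect a container from a network

`docker network inspect`: show a network's details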

Demo: custom networks

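Create the networks. The later `docker run` commands pin containers to fixed addresses with `--ip 172.20.0.x` (and `172.21.0.x` on `mybak`). Docker only accepts `--ip` on a user-defined network created with an explicit subnet:

```shell
docker network create --subnet=172.20.0.0/16 mynet
# Used by the mysql5 container below
docker network create --subnet=172.21.0.0/16 mybak
```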

```shell
# 2. List networks (this example output is from a host where the network was named hmall)
docker network ls
# Output:
NETWORK ID     NAME      DRIVER    SCOPE
639bc44d0a87   bridge    bridge    local
403f16ec62a2   hmall     bridge    local
0dc0f72a0fbb   host      host      local
cd8d3e8df47b   none      null      local
# Apart from hmall, these are the default networks

# 3. Connect dd and mysql to the network. --alias gives the container an alias
#    that other containers on the same network can use to reach it.
# 3.1 The mysql container, with alias db (every container's name is also an alias)
docker network connect hmall mysql --alias db
# 3.2 The dd container, i.e. our Java application
docker network connect hmall dd

# 4. Enter the dd container and reach the database by alias
# 4.1 Open a shell in the container
docker exec -it dd bash
# 4.2 Ping by the alias db
ping db
# Output:
PING db (172.18.0.2) 56(84) bytes of data.
64 bytes from mysql.hmall (172.18.0.2): icmp_seq=1 ttl=64 time=0.070 ms
64 bytes from mysql.hmall (172.18.0.2): icmp_seq=2 ttl=64 time=0.056 ms
# 4.3 Ping by container name
ping mysql
# Output:
PING mysql (172.18.0.2) 56(84) bytes of data.
64 bytes from mysql.hmall (172.18.0.2): icmp_seq=1 ttl=64 time=0.044 ms
64 bytes from mysql.hmall (172.18.0.2): icmp_seq=2 ttl=64 time=0.054 ms
```

Containers can now reach each other without anyone needing to know their IP addresses.

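Summary:

In a custom network, a container can have several aliases. Its container name is always one of them.

Containers on the same custom network can reach each other by alias.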
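Setup scripts are easier to re-run if network creation is idempotent, meaning it does nothing on a host where the network already exists. The sketch below, with a hypothetical helper name, checks with `docker network inspect` first. It assumes the subnets you pass (for example `172.20.0.0/16`) do not collide with other networks on the host:

```shell
# Create a user-defined bridge network with a fixed subnet, unless it already exists.
# Usage: ensure_network <name> <subnet>, e.g. ensure_network mynet 172.20.0.0/16
ensure_network() {
  local name=$1 subnet=$2
  if docker network inspect "$name" > /dev/null 2>&1; then
    echo "network $name already exists"
  else
    docker network create --driver bridge --subnet "$subnet" "$name"
  fi
}
```

Usage: `ensure_network mynet 172.20.0.0/16; ensure_network mybak 172.21.0.0/16`. To confirm the subnet afterwards, run `docker network inspect --format '{{range .IPAM.Config}}{{.Subnet}}{{end}}' mynet`.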

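Install wget

```shell
# Offline: install a downloaded RPM (CentOS 7)
rpm -ivh /opt/wget-1.14-10.el7.x86_64.rpm
# Online: CentOS / RHEL
sudo yum install -y wget
# Online: Debian / Ubuntu
sudo apt-get install wget
```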

Install Python and pip

```shell
# CentOS: pip comes from the EPEL repository (on CentOS 7 this is pip for Python 2)
sudo yum -y install epel-release
sudo yum -y install python-pip

# Debian / Ubuntu
sudo apt-get install python3-pip
```

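Install or upgrade Python and pip from source

```shell
# Building needs a compiler and headers (for example gcc, zlib-devel, openssl-devel, libffi-devel on CentOS)
sudo mkdir /usr/local/python3
wget --no-check-certificate https://www.python.org/ftp/python/3.8.2/Python-3.8.2.tgz
tar xzvf Python-3.8.2.tgz
cd Python-3.8.2
./configure --prefix=/usr/local/python3
make && sudo make install

# Make "python" point to the new interpreter.
# Caution: on CentOS 7, /usr/bin/python already exists (Python 2, used by yum), so ln -s fails
# unless it is removed first, and replacing it breaks yum. Linking /usr/bin/python3 is safer.
sudo ln -s /usr/local/python3/bin/python3 /usr/bin/python

# Debian / Ubuntu: install from the package manager instead
sudo apt install python3

# Upgrade pip
python -m pip install --upgrade pip

# Put the new bin directory on PATH (add this line to ~/.bashrc to keep it across logins)
export PATH=/usr/local/python3/bin/:$PATH
```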

Install speedtest-cli (network speed test)

```shell
# With pip
sudo pip install speedtest-cli
# Debian / Ubuntu
sudo apt install speedtest-cli
```

frp client (frpc) configuration

```toml
[[proxies]]
name = "name"
type = "tcp"
localIP = "localhost"
localPort = 11
remotePort = 11
```
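The `[[proxies]]` table alone is not enough, because frpc also needs to know which frps server to connect to. The sketch below writes a complete `frpc.toml`. It assumes frp 0.52 or later (TOML configuration). The helper name and every value in the usage line (server address, port, token, port mapping) are hypothetical:

```shell
# Write a minimal frpc.toml (frp >= 0.52 TOML format).
# Usage: write_frpc_conf <file> <server-addr> <server-port> <token> <local-port> <remote-port>
write_frpc_conf() {
  cat > "$1" <<EOF
serverAddr = "$2"
serverPort = $3
auth.token = "$4"

[[proxies]]
name = "tcp-$5"
type = "tcp"
localIP = "127.0.0.1"
localPort = $5
remotePort = $6
EOF
}
```

Usage: `write_frpc_conf ./frpc.toml 203.0.113.10 7000 change-me 22 6000`, then start the client with `./frpc -c ./frpc.toml`. The token must match the one configured on the frps side.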

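Start Elasticsearch from an extracted directory (without Docker)

```shell
cd /usr/local/soft/elasticsearch-7.17.16
# -d runs it in the background; Elasticsearch refuses to start as root, so use a regular user
./bin/elasticsearch -d
```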

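Java application deployment: package the jar

```dockerfile
FROM openjdk:11.0-jre-buster
ENV TZ=Asia/Shanghai
RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone
COPY backend.jar /app.jar
ENTRYPOINT ["java", "-jar", "/app.jar"]
```

Put the jar and the Dockerfile in the same directory on the server, then change into that directory:

```shell
# Build the image from the Dockerfile in the current directory
docker build -t blink_backend .
# Run it in the background, publishing port 7070
docker run -d --name blink_backend -p 7070:7070 blink_backend
# Follow the application log
docker logs -f blink_backend
```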

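MySQL container

```shell
# Data, config and init scripts are bind-mounted from ./mysql on the host.
# lower_case_table_names=1 makes table names case-insensitive; MySQL 8 only accepts
# it when the data directory is first initialized.
docker run -d \
  --name mysql \
  -p 3306:3306 \
  -e TZ=Asia/Shanghai \
  -e MYSQL_ROOT_PASSWORD=123456 \
  -v ./mysql/data:/var/lib/mysql \
  -v ./mysql/conf:/etc/mysql/conf.d \
  -v ./mysql/init:/docker-entrypoint-initdb.d \
  --restart=always \
  --network mynet \
  --ip 172.20.0.2 \
  mysql --lower_case_table_names=1
```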

```shell
# MySQL 5.7 on the mybak network, published on host port 3305
docker run -d \
  --name mysql5 \
  -p 3305:3306 \
  -e TZ=Asia/Shanghai \
  -e MYSQL_ROOT_PASSWORD=123456 \
  -v ./mysql5/data:/var/lib/mysql \
  -v ./mysql5/conf:/etc/mysql/conf.d \
  -v ./mysql5/init:/docker-entrypoint-initdb.d \
  --restart=always \
  --network mybak \
  --ip 172.21.0.2 \
  mysql:5.7 --lower_case_table_names=1
```

Redis installation

```shell
docker run \
  --name redis \
  -p 6379:6379 \
  -v ./redis/conf/redis.conf:/etc/redis/redis.conf \
  -v ./redis/data:/data \
  --restart=always \
  --network mynet \
  --ip 172.20.0.3 \
  -d redis redis-server /etc/redis/redis.conf \
  --requirepass 123456
```

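If the password from the config file does not take effect, set it manually:

```shell
docker exec -it redis bash
# Sets the password at runtime only; it is lost on restart unless also written to redis.conf
redis-cli config set requirepass 123456
```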

Install Nacos

Create `./nacos/custom.env` with the database settings. Nacos reaches the MySQL container on `mynet` by its name, `mysql`:

```properties
PREFER_HOST_MODE=hostname
MODE=standalone
SPRING_DATASOURCE_PLATFORM=mysql
MYSQL_SERVICE_HOST=mysql
MYSQL_SERVICE_DB_NAME=nacos
MYSQL_SERVICE_PORT=3306
MYSQL_SERVICE_USER=root
MYSQL_SERVICE_PASSWORD=123456
MYSQL_SERVICE_DB_PARAM=characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useSSL=false&allowPublicKeyRetrieval=true&serverTimezone=Asia/Shanghai
```

```shell
docker pull nacos/nacos-server:v2.2.2
```

```shell
# 8848: HTTP API and console; 9848/9849: gRPC ports used by Nacos 2.x
docker run -d \
  --name nacos \
  --env-file ./nacos/custom.env \
  -p 8848:8848 \
  -p 9848:9848 \
  -p 9849:9849 \
  --restart=always \
  --network mynet \
  --ip 172.20.0.4 \
  nacos/nacos-server:v2.2.2
```

To enable authentication, edit `application.properties`:

```shell
vi application.properties
```

```properties
### Enable the authentication system:
nacos.core.auth.system.type=nacos
nacos.core.auth.enabled=true
### Secret key for JWT tokens; generating your own is recommended.
### Use only one of the two key lines below; if both are present, the later one wins.
#nacos.core.auth.plugin.nacos.token.secret.key=SecretKey012345678901234567890123456789012345678901234567890123456789
### Base64-encoded string
nacos.core.auth.plugin.nacos.token.secret.key=VGhpc0lzTXlDdXN0b21TZWNyZXRLZXkwMTIzNDU2Nzg=
nacos.core.auth.server.identity.key=example
nacos.core.auth.server.identity.value=example
```
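The comments above recommend generating your own secret. Per the Nacos 2.2.x guidance, the key should be a Base64 string whose decoded value is at least 32 bytes long. The sketch below produces one from `/dev/urandom` using coreutils `base64`. It is a minimal example and the helper name is hypothetical:

```shell
# Print a random Base64 key that decodes to exactly 32 bytes
# (intended for nacos.core.auth.plugin.nacos.token.secret.key).
gen_nacos_secret() {
  head -c 32 /dev/urandom | base64 | tr -d '\n'
}
```

Usage: `echo "nacos.core.auth.plugin.nacos.token.secret.key=$(gen_nacos_secret)"`. 32 random bytes encode to a 44-character string.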

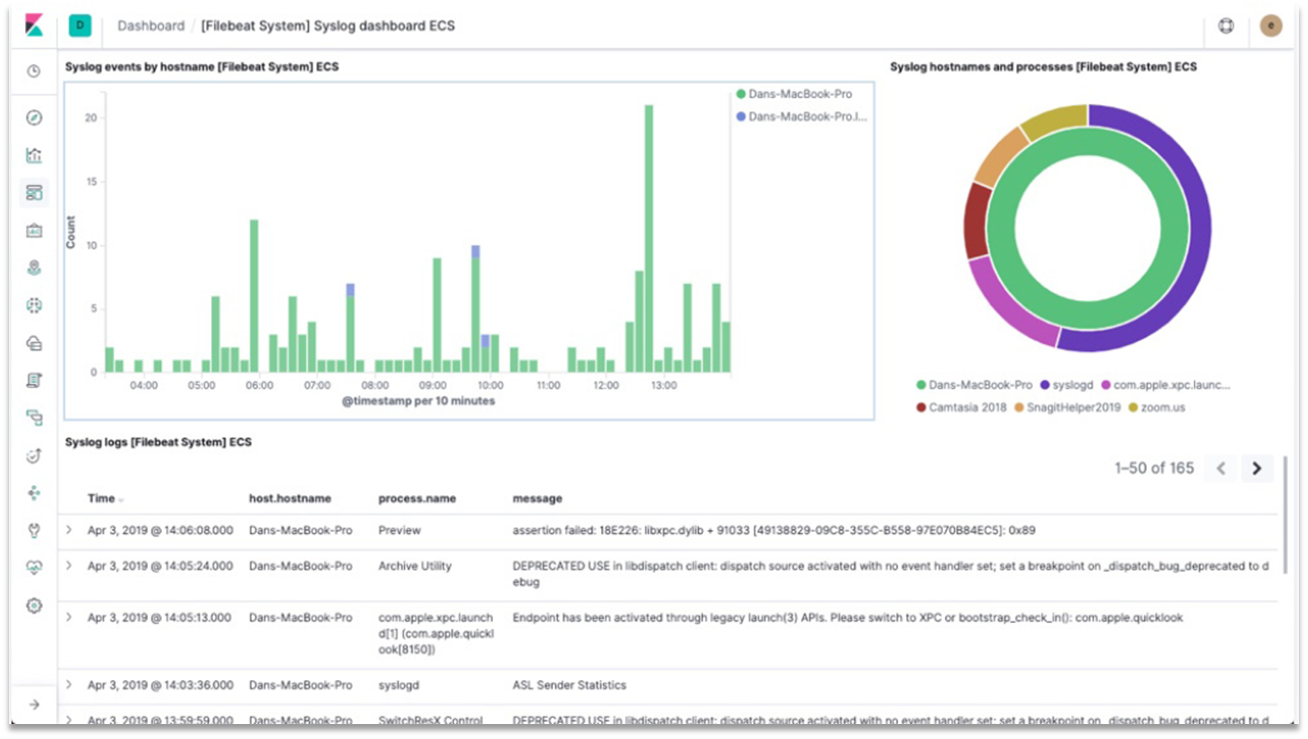

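Elastic stack

Elasticsearch is a search engine developed by Elastic and is one part of the Elastic Stack, which consists of:

Elasticsearch: data storage, computation and search

Logstash/Beats: data collection

Kibana: data visualization

The stack is known as ELK and is commonly used for log collection, system monitoring and status analysis.

Its core is Elasticsearch, which stores, searches and computes over the data, so Elasticsearch is also the focus of what follows.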

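We will install two components:

elasticsearch: storage, search and computation

kibana: graphical interface

Elasticsearch provides the core storage, search and analysis features.

Kibana is needed because Elasticsearch exposes a RESTful API. Every operation is an HTTP request, and the request method, path and body format all follow strict conventions that are hard to memorize, so we use Kibana to help.

Kibana is Elastic's visual console for working with Elasticsearch. Its features include:

Searching and displaying Elasticsearch data

Computing statistics and aggregations over Elasticsearch data and turning them into charts and reports

Monitoring the state of the Elasticsearch cluster

A developer console (Dev Tools) with syntax hints for the Elasticsearch REST API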

Install Elasticsearch

```shell
# Offline: load the image from a tar file
docker load -i elasticsearch.tar
# Online: pull the image
docker pull elasticsearch:7.17.16
```

```shell
# Single-node mode with a 512 MB heap; data and plugins live in named volumes
docker run -d \
  --name elasticsearch \
  -e "ES_JAVA_OPTS=-Xms512m -Xmx512m" \
  -e "discovery.type=single-node" \
  -v es-data:/usr/share/elasticsearch/data \
  -v es-plugins:/usr/share/elasticsearch/plugins \
  --privileged \
  --restart=always \
  --network mynet \
  --ip 172.20.0.5 \
  -p 9200:9200 \
  -p 9300:9300 \
  elasticsearch:7.17.16
```

Set a username and password for Elasticsearch

Enable security in `elasticsearch.yml` from inside the container:

```shell
sudo docker exec -it elasticsearch bash
cd /usr/share/elasticsearch/config
echo "xpack.security.enabled: true
xpack.license.self_generated.type: basic
xpack.security.transport.ssl.enabled: true" >> elasticsearch.yml
```

Leave the container (`exit`), restart Elasticsearch, then run the password setup tool:

```shell
sudo docker restart elasticsearch
sudo docker exec -it elasticsearch bash
./bin/elasticsearch-setup-passwords interactive
```

```text
root@f6b93f64adc4:/usr/share/elasticsearch# ./bin/elasticsearch-setup-passwords interactive
Initiating the setup of passwords for reserved users elastic,apm_system,kibana,kibana_system,logstash_system,beats_system,remote_monitoring_user.
You will be prompted to enter passwords as the process progresses.
Please confirm that you would like to continue [y/N]
```

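To enable password protection, you must set passwords for the seven reserved users listed above. Using the same password for all of them is recommended.

Enter `y`, set the password for each user in turn, then leave the container and restart Elasticsearch:

```shell
sudo docker restart elasticsearch
```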

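Install Kibana

```shell
# Offline: load the image from a tar file
docker load -i kibana.tar
# Online: pull the image
docker pull kibana:7.17.16
```

```shell
# ELASTICSEARCH_HOSTS uses the elasticsearch container's name, which resolves on mynet
docker run -d \
  --name kibana \
  -e ELASTICSEARCH_HOSTS=http://elasticsearch:9200 \
  --restart=always \
  --network mynet \
  --ip 172.20.0.6 \
  -p 5601:5601 \
  kibana:7.17.16
```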

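Configure the Elasticsearch username and password inside the kibana container

```shell
sudo docker exec -it kibana bash
# Append the credentials to kibana.yml (use the password set above).
# The built-in kibana_system user is intended for this connection; elastic also works in 7.x.
echo -e '\nelasticsearch.username: "elastic"' >> /usr/share/kibana/config/kibana.yml
echo -e 'elasticsearch.password: "123456"\n' >> /usr/share/kibana/config/kibana.yml
```

Leave the container, then restart Kibana:

```shell
sudo docker restart kibana
```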

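Install the IK analyzer

Option 1: online installation

```shell
# The plugin version must match the Elasticsearch version exactly (7.17.16 here).
# Elasticsearch 7.17.16 rejects the 7.12.1 build in this URL, so substitute the matching release.
docker exec -it elasticsearch ./bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.12.1/elasticsearch-analysis-ik-7.12.1.zip
docker restart elasticsearch
```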

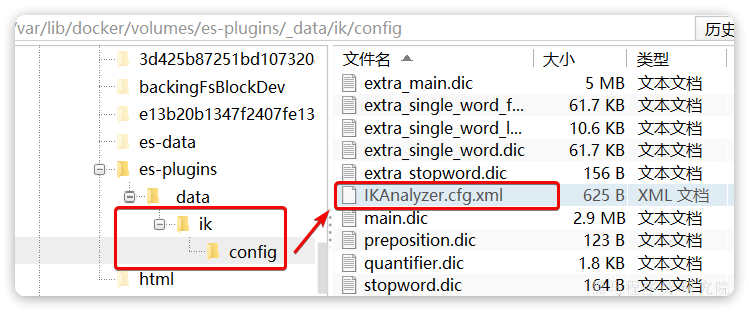

Option 2: offline installation

Find where the es-plugins volume is stored on the host:

```shell
docker volume inspect es-plugins
```

Output:

```json
[
    {
        "CreatedAt": "2024-11-06T10:06:34+08:00",
        "Driver": "local",
        "Labels": null,
        "Mountpoint": "/var/lib/docker/volumes/es-plugins/_data",
        "Name": "es-plugins",
        "Options": null,
        "Scope": "local"
    }
]
```

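Upload the unpacked IK plugin folder (same version as Elasticsearch) into that directory, then restart Elasticsearch:

```shell
cd /var/lib/docker/volumes/es-plugins/_data
# upload the ik folder here, then:
docker restart elasticsearch
```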
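You can combine the offline steps into one helper. The sketch below is a minimal example. It assumes the IK release zip is already unpacked into a local directory (hypothetically `./ik`) whose version matches Elasticsearch 7.17.16. It looks up the volume's mount point with `docker volume inspect --format` instead of hard-coding the path:

```shell
# Copy an unpacked IK plugin directory into the es-plugins volume, then restart ES.
# Usage: install_ik_offline ./ik    (run as root: the volume lives under /var/lib/docker)
install_ik_offline() {
  local src=$1 dest
  dest=$(docker volume inspect --format '{{ .Mountpoint }}' es-plugins) || return 1
  mkdir -p "$dest/ik"
  cp -r "$src"/. "$dest/ik/"        # copy the plugin's files into plugins/ik
  docker restart elasticsearch
}
```

After the restart, `docker logs -f elasticsearch` should show the plugin being loaded.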

Using the IK analyzer

The IK analyzer has two modes:

`ik_smart`: smart semantic segmentation (coarse-grained)

`ik_max_word`: finest-grained segmentation

Test the analyzers in Kibana's Dev Tools, starting with the standard analyzer built into Elasticsearch:

```
POST /_analyze
{
  "analyzer": "standard",
  "text": "黑马程序员学习java太棒了"
}
```

Result:

```json
{
  "tokens" : [
    { "token" : "黑", "start_offset" : 0, "end_offset" : 1, "type" : "<IDEOGRAPHIC>", "position" : 0 },
    { "token" : "马", "start_offset" : 1, "end_offset" : 2, "type" : "<IDEOGRAPHIC>", "position" : 1 },
    { "token" : "程", "start_offset" : 2, "end_offset" : 3, "type" : "<IDEOGRAPHIC>", "position" : 2 },
    { "token" : "序", "start_offset" : 3, "end_offset" : 4, "type" : "<IDEOGRAPHIC>", "position" : 3 },
    { "token" : "员", "start_offset" : 4, "end_offset" : 5, "type" : "<IDEOGRAPHIC>", "position" : 4 },
    { "token" : "学", "start_offset" : 5, "end_offset" : 6, "type" : "<IDEOGRAPHIC>", "position" : 5 },
    { "token" : "习", "start_offset" : 6, "end_offset" : 7, "type" : "<IDEOGRAPHIC>", "position" : 6 },
    { "token" : "java", "start_offset" : 7, "end_offset" : 11, "type" : "<ALPHANUM>", "position" : 7 },
    { "token" : "太", "start_offset" : 11, "end_offset" : 12, "type" : "<IDEOGRAPHIC>", "position" : 8 },
    { "token" : "棒", "start_offset" : 12, "end_offset" : 13, "type" : "<IDEOGRAPHIC>", "position" : 9 },
    { "token" : "了", "start_offset" : 13, "end_offset" : 14, "type" : "<IDEOGRAPHIC>", "position" : 10 }
  ]
}
```

As you can see, the standard analyzer can only produce one token per character, so it cannot segment Chinese text correctly.

Now test the IK analyzer:

```
POST /_analyze
{
  "analyzer": "ik_smart",
  "text": "黑马程序员学习java太棒了"
}
```

Result:

```json
{
  "tokens" : [
    { "token" : "黑马", "start_offset" : 0, "end_offset" : 2, "type" : "CN_WORD", "position" : 0 },
    { "token" : "程序员", "start_offset" : 2, "end_offset" : 5, "type" : "CN_WORD", "position" : 1 },
    { "token" : "学习", "start_offset" : 5, "end_offset" : 7, "type" : "CN_WORD", "position" : 2 },
    { "token" : "java", "start_offset" : 7, "end_offset" : 11, "type" : "ENGLISH", "position" : 3 },
    { "token" : "太棒了", "start_offset" : 11, "end_offset" : 14, "type" : "CN_WORD", "position" : 4 }
  ]
}
```

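Extension dictionary

As the Internet evolves, new words keep appearing that are missing from existing vocabularies, for example "泰裤辣" and "传智播客".

The IK analyzer cannot segment these words correctly. Let's test it:

```
POST /_analyze
{
  "analyzer": "ik_max_word",
  "text": "传智播客开设大学,真的泰裤辣!"
}
```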

Result:

```json
{
  "tokens" : [
    { "token" : "传", "start_offset" : 0, "end_offset" : 1, "type" : "CN_CHAR", "position" : 0 },
    { "token" : "智", "start_offset" : 1, "end_offset" : 2, "type" : "CN_CHAR", "position" : 1 },
    { "token" : "播", "start_offset" : 2, "end_offset" : 3, "type" : "CN_CHAR", "position" : 2 },
    { "token" : "客", "start_offset" : 3, "end_offset" : 4, "type" : "CN_CHAR", "position" : 3 },
    { "token" : "开设", "start_offset" : 4, "end_offset" : 6, "type" : "CN_WORD", "position" : 4 },
    { "token" : "大学", "start_offset" : 6, "end_offset" : 8, "type" : "CN_WORD", "position" : 5 },
    { "token" : "真的", "start_offset" : 9, "end_offset" : 11, "type" : "CN_WORD", "position" : 6 },
    { "token" : "泰", "start_offset" : 11, "end_offset" : 12, "type" : "CN_CHAR", "position" : 7 },
    { "token" : "裤", "start_offset" : 12, "end_offset" : 13, "type" : "CN_CHAR", "position" : 8 },
    { "token" : "辣", "start_offset" : 13, "end_offset" : 14, "type" : "CN_CHAR", "position" : 9 }
  ]
}
```

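As you can see, neither `传智播客` nor `泰裤辣` is segmented correctly.

To segment such words correctly, the IK dictionary has to be kept up to date. The IK analyzer supports extension dictionaries for this purpose.

1) Open the IK analyzer's config directory.

Note: the online installation has no config directory by default. Upload the config folder from the ik directory in the course materials to the corresponding location.

2) Add the following to the IKAnalyzer.cfg.xml configuration file: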

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
<properties>
    <comment>IK Analyzer 扩展配置</comment>
    <!--用户可以在这里配置自己的扩展字典 *** 添加扩展词典-->
    <entry key="ext_dict">ext.dic</entry>
</properties>
```

3) Create `ext.dic` in the IK config directory, one word per line. You can copy one of the existing dictionary files in config and edit it.

4) Restart Elasticsearch

```shell
docker restart elasticsearch
# Check the logs
docker logs -f elasticsearch
```
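For step 3, `ext.dic` is a plain UTF-8 text file with one word per line. The sketch below appends words and skips any that are already present. It is a minimal example: the helper name is hypothetical, and the dictionary path in the usage line assumes the IK config directory sits under the es-plugins volume used earlier:

```shell
# Append words to an IK extension dictionary (UTF-8, one word per line), skipping duplicates.
# Usage: add_ik_words <path-to-ext.dic> <word>...
add_ik_words() {
  local dic=$1 w
  shift
  touch "$dic"
  # Make sure the file ends with a newline before appending
  if [ -s "$dic" ] && [ -n "$(tail -c 1 "$dic")" ]; then
    printf '\n' >> "$dic"
  fi
  for w in "$@"; do
    grep -qxF -- "$w" "$dic" || printf '%s\n' "$w" >> "$dic"
  done
}
```

Usage: `add_ik_words /var/lib/docker/volumes/es-plugins/_data/ik/config/ext.dic 传智播客 泰裤辣`, then restart Elasticsearch as in step 4.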

Test again. `传智播客` and `泰裤辣` are now segmented correctly:

```json
{
  "tokens" : [
    { "token" : "传智播客", "start_offset" : 0, "end_offset" : 4, "type" : "CN_WORD", "position" : 0 },
    { "token" : "开设", "start_offset" : 4, "end_offset" : 6, "type" : "CN_WORD", "position" : 1 },
    { "token" : "大学", "start_offset" : 6, "end_offset" : 8, "type" : "CN_WORD", "position" : 2 },
    { "token" : "真的", "start_offset" : 9, "end_offset" : 11, "type" : "CN_WORD", "position" : 3 },
    { "token" : "泰裤辣", "start_offset" : 11, "end_offset" : 14, "type" : "CN_WORD", "position" : 4 }
  ]
}
```

Summary

What does an analyzer do?

It tokenizes documents when the inverted index is built

It tokenizes the user's input at search time

How many modes does the IK analyzer have?

`ik_smart`: smart segmentation, coarse-grained

`ik_max_word`: finest segmentation, fine-grained

How do you add extension words or stop words to the IK analyzer?

Register an extension dictionary and a stop-word dictionary in `IKAnalyzer.cfg.xml` in the config directory

Add the extension words or stop words to those dictionaries

Seata installation and configuration

```shell
docker pull seataio/seata-server:1.5.2
```

```shell
# SEATA_IP is the address Seata registers with; here, the host's first IP address
docker run --name seata \
  -p 8099:8099 \
  -p 7099:7099 \
  -e SEATA_IP=$(hostname -I | awk '{print $1}') \
  -v ./seata:/seata-server/resources \
  --privileged=true \
  --restart=always \
  --network mynet \
  --ip 172.20.0.7 \
  -d \
  seataio/seata-server:1.5.2
```

RabbitMQ installation

```shell
# Version 3.8 with the management UI
docker pull rabbitmq:3.8-management
# Or the latest version with the management UI
docker pull rabbitmq:management
```

```shell
# 15672: management UI, 5672: AMQP
docker run \
  -e RABBITMQ_DEFAULT_USER=rabbitmq \
  -e RABBITMQ_DEFAULT_PASS=123456 \
  -v mq-plugins:/plugins \
  --name rabbitmq \
  --hostname rabbitmq \
  -p 15672:15672 \
  -p 5672:5672 \
  --restart=always \
  --network mynet \
  --ip 172.20.0.8 \
  -d \
  rabbitmq:3.8-management
```

Or, with the latest management image:

```shell
docker run \
  -e RABBITMQ_DEFAULT_USER=rabbitmq \
  -e RABBITMQ_DEFAULT_PASS=123456 \
  -v mq-plugins:/plugins \
  --name rabbitmq \
  --hostname rabbitmq \
  -p 15672:15672 \
  -p 5672:5672 \
  --restart=always \
  --network mynet \
  --ip 172.20.0.8 \
  -d \
  rabbitmq:management
```

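To install the delayed-message plugin, first find where the mq-plugins volume is stored:

```shell
docker volume inspect mq-plugins
```

Output:

```json
[
    {
        "CreatedAt": "2024-06-19T09:22:59+08:00",
        "Driver": "local",
        "Labels": null,
        "Mountpoint": "/var/lib/docker/volumes/mq-plugins/_data",
        "Name": "mq-plugins",
        "Options": null,
        "Scope": "local"
    }
]
```

Upload the plugin file (`rabbitmq_delayed_message_exchange-*.ez`, built for your RabbitMQ version) into that directory, then enable it:

```shell
cd /var/lib/docker/volumes/mq-plugins/_data
docker exec -it rabbitmq rabbitmq-plugins enable rabbitmq_delayed_message_exchange
```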
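The sketch below wraps the same sequence, including the copy step that happens between `cd` and `rabbitmq-plugins enable`. It is a minimal example. It assumes you have already downloaded a `rabbitmq_delayed_message_exchange-*.ez` release that matches your RabbitMQ 3.8 server. The helper name is hypothetical, and the version in the usage line is a placeholder:

```shell
# Copy a plugin .ez file into the mq-plugins volume, then enable it in the running container.
# Usage: enable_delayed_plugin ./rabbitmq_delayed_message_exchange-<version>.ez   (run as root)
enable_delayed_plugin() {
  local ez=$1 dest
  dest=$(docker volume inspect --format '{{ .Mountpoint }}' mq-plugins) || return 1
  cp "$ez" "$dest/"
  docker exec rabbitmq rabbitmq-plugins enable rabbitmq_delayed_message_exchange
}
```

Once the plugin is enabled, the management UI should offer `x-delayed-message` as an exchange type.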

MinIO installation

```shell
# Host directories for configuration and data
mkdir -p /usr/local/soft/minio/config
mkdir -p /usr/local/soft/minio/data
```

```shell
# 9000: S3 API, 9001: web console
docker run --name minio \
  --net=mynet \
  --ip 172.20.0.9 \
  -p 9000:9000 -p 9001:9001 \
  -d --restart=always \
  -e "MINIO_ROOT_USER=minio" \
  -e "MINIO_ROOT_PASSWORD=minio_123456" \
  -v /usr/local/soft/minio/data:/data \
  -v /usr/local/soft/minio/config:/root/.minio \
  minio/minio server \
  /data --console-address ":9001" --address ":9000"
```

RocketMQ installation

```shell
docker pull apache/rocketmq:5.3.0
```

Name server:

```shell
sudo docker run -d \
  --privileged=true \
  --restart=always \
  --name rmqnamesrv \
  --network mynet \
  --ip 172.20.0.10 \
  -p 9876:9876 \
  -v /usr/local/soft/rocketmq/nameserver/logs:/home/rocketmq/logs \
  -v /usr/local/soft/rocketmq/nameserver/bin/runserver.sh:/home/rocketmq/rocketmq-5.3.0/bin/runserver.sh \
  -e "MAX_HEAP_SIZE=256M" \
  -e "HEAP_NEWSIZE=128M" \
  apache/rocketmq:5.3.0 sh mqnamesrv
```

Broker:

```shell
docker run -d \
  --restart=always \
  --name rmqbroker \
  --network mynet \
  --ip 172.20.0.11 \
  -p 10911:10911 -p 10909:10909 \
  --privileged=true \
  -v /usr/local/soft/rocketmq/broker/logs:/root/logs \
  -v /usr/local/soft/rocketmq/broker/store:/root/store \
  -v /usr/local/soft/rocketmq/broker/conf/broker.conf:/home/rocketmq/broker.conf \
  -v /usr/local/soft/rocketmq/broker/bin/runbroker.sh:/home/rocketmq/rocketmq-5.3.0/bin/runbroker.sh \
  -e "MAX_HEAP_SIZE=512M" \
  -e "HEAP_NEWSIZE=256M" \
  apache/rocketmq:5.3.0 \
  sh mqbroker -c /home/rocketmq/broker.conf
```

Dashboard:

```shell
docker pull apacherocketmq/rocketmq-dashboard:latest
```

```shell
# The dashboard finds the name server by its container name on mynet; UI on host port 8180
docker run -d \
  --restart=always \
  --name rmqdashboard \
  --network mynet \
  --ip 172.20.0.12 \
  -e "JAVA_OPTS=-Xmx256M -Xms256M -Xmn128M -Drocketmq.namesrv.addr=rmqnamesrv:9876 -Dcom.rocketmq.sendMessageWithVIPChannel=false" \
  -p 8180:8080 \
  apacherocketmq/rocketmq-dashboard
```
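The broker container mounts `/usr/local/soft/rocketmq/broker/conf/broker.conf`, but this section does not show that file's contents. The sketch below writes a minimal version for a single master broker. It uses standard RocketMQ broker keys. `namesrvAddr` points at the name server's container name on `mynet`. `brokerIP1`, the address clients are told to connect to, is a placeholder you must replace. The helper name is hypothetical:

```shell
# Write a minimal single-master broker.conf for the rmqbroker container.
# Usage: write_broker_conf <file> <broker-ip>
write_broker_conf() {
  mkdir -p "$(dirname "$1")"
  cat > "$1" <<EOF
brokerClusterName = DefaultCluster
brokerName = broker-a
brokerId = 0
deleteWhen = 04
fileReservedTime = 48
brokerRole = ASYNC_MASTER
flushDiskType = ASYNC_FLUSH
namesrvAddr = rmqnamesrv:9876
brokerIP1 = $2
EOF
}
```

Run it before starting the broker, for example `write_broker_conf /usr/local/soft/rocketmq/broker/conf/broker.conf <host-ip>`. If the bind-mount source file does not exist, Docker creates a directory at that path instead, and the broker cannot read its config.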

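Java application installation

Upload the jar and the Dockerfile into the same directory.

```dockerfile
FROM adoptopenjdk/openjdk8:jre8u-nightly
ENV TZ=Asia/Shanghai
RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone
COPY blink_backend.jar /app.jar
ENTRYPOINT ["java", "-jar", "/app.jar"]
```

```shell
docker build -t <image-name>_backend .
```

```shell
# Options such as --network must come before the image name;
# anything after the image is passed to the container's command instead
docker run -d --name <container-name>-backend -p 7070:7070 --network mynet <image-name>_backend
```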

Nginx application installation

```shell
docker run -d \
  --name <container-name>-nginx \
  -p 8787:8787 \
  -p 8788:8788 \
  --network mynet \
  -v /usr/local/soft/<container-name>_nginx/html:/usr/share/nginx/html \
  -v /usr/local/soft/<container-name>_nginx/nginx.conf:/etc/nginx/nginx.conf \
  nginx
```

Deployment

```shell
cd /usr/local/soft/shopping/shopping_backend/
docker build -t shopping_backend .
docker run -d \
  --name shopping-backend \
  --network mynet \
  --ip 172.20.0.13 \
  -p 808:808 \
  --restart=always \
  shopping_backend
```

```shell
docker pull nginx
docker run -d \
  --name shopping-nginx \
  --network mynet \
  --ip 172.20.0.14 \
  -p 1808:1808 \
  -p 2808:2808 \
  -v /usr/local/soft/shopping/shopping_nginx/html:/usr/share/nginx/html \
  -v /usr/local/soft/shopping/shopping_nginx/nginx.conf:/etc/nginx/nginx.conf \
  --restart=always \
  nginx
```

```shell
cd /usr/local/soft/sky/sky_backend/
docker build -t sky_backend .
docker run -d \
  --name sky-backend \
  --network mynet \
  --ip 172.20.0.15 \
  -p 8081:8081 \
  --restart=always \
  sky_backend
```

```shell
docker run -d \
  --name sky-nginx \
  --network mynet \
  --ip 172.20.0.16 \
  -p 81:81 \
  -v /usr/local/soft/sky/sky_nginx/html:/usr/share/nginx/html \
  -v /usr/local/soft/sky/sky_nginx/nginx.conf:/etc/nginx/nginx.conf \
  --restart=always \
  nginx
```

```shell
cd /usr/local/soft/xk/xk_backend/
docker build -t xk_backend .
docker run -d \
  --name xk-backend \
  --network mynet \
  --ip 172.20.0.17 \
  -p 8082:8082 \
  --restart=always \
  xk_backend
```

```shell
docker run -d \
  --name xk-nginx \
  --network mynet \
  --ip 172.20.0.18 \
  -p 82:82 \
  -v /usr/local/soft/xk/xk_nginx/html:/usr/share/nginx/html \
  -v /usr/local/soft/xk/xk_nginx/nginx.conf:/etc/nginx/nginx.conf \
  --restart=always \
  nginx
```

```shell
cd /usr/local/soft/blink/blink_backend/
docker build -t blink_backend .
docker run -d \
  --name blink-backend \
  --network mynet \
  --ip 172.20.0.19 \
  -p 7070:7070 \
  --restart=always \
  blink_backend
```

```shell
docker run -d \
  --name blink-nginx \
  --network mynet \
  --ip 172.20.0.20 \
  -p 8787:8787 \
  -p 8788:8788 \
  -v /usr/local/soft/blink/blink_nginx/html:/usr/share/nginx/html \
  -v /usr/local/soft/blink/blink_nginx/nginx.conf:/etc/nginx/nginx.conf \
  --restart=always \
  nginx
```

```shell
cd /usr/local/soft/chat/chat_backend/
docker build -t chat_master .
docker run -d \
  --name chat-master \
  --network mynet \
  --ip 172.20.0.21 \
  -p 8088:8088 \
  --restart=always \
  chat_master
```

```shell
docker run -d \
  --name chat-nginx \
  --network mynet \
  --ip 172.20.0.22 \
  -p 810:810 \
  -p 820:820 \
  -v /usr/local/soft/chat/chat_nginx/html:/usr/share/nginx/html \
  -v /usr/local/soft/chat/chat_nginx/nginx.conf:/etc/nginx/nginx.conf \
  --restart=always \
  nginx
```

```shell
cd /usr/local/soft/blog/blog_backend/
docker build -t blog_backend .
docker run -d \
  --name blog-backend \
  --network mynet \
  --ip 172.20.0.23 \
  -p 8800:8800 \
  --restart=always \
  blog_backend
```

```shell
docker run -d \
  --name blog-nginx \
  --network mynet \
  --ip 172.20.0.24 \
  -p 380:380 \
  -p 390:390 \
  --restart=always \
  -v /usr/local/soft/blog/blog_nginx/html:/usr/share/nginx/html \
  -v /usr/local/soft/blog/blog_nginx/nginx.conf:/etc/nginx/nginx.conf \
  nginx
```

```shell
cd /usr/local/soft/source/source_backend/
docker build -t source-admin .
docker run -d \
  --name source-admin \
  --network mynet \
  --ip 172.20.0.25 \
  -p 8087:8087 \
  --restart=always \
  source-admin
```

```shell
docker run -d \
  --name source-nginx \
  --network mynet \
  --ip 172.20.0.26 \
  -p 2828:2828 \
  -v /usr/local/soft/source/source_nginx/html:/usr/share/nginx/html \
  -v /usr/local/soft/source/source_nginx/nginx.conf:/etc/nginx/nginx.conf \
  --restart=always \
  nginx
```

```shell
cd /usr/local/soft/im/im-platform/
docker build -t im_platform .
docker run -d \
  --name im-platform \
  --network mynet \
  --ip 172.20.0.27 \
  -p 8888:8888 \
  --restart=always \
  im_platform

cd /usr/local/soft/im/im-server/
docker build -t im_server .
docker run -d \
  --name im-server \
  --network mynet \
  --ip 172.20.0.28 \
  -p 8878:8878 \
  --restart=always \
  im_server

cd /usr/local/soft/im/admin_backend/
docker build -t im-admin .
docker run -d \
  --name im-admin \
  --network mynet \
  --ip 172.20.0.29 \
  -p 8889:8889 \
  --restart=always \
  im-admin

docker run -d \
  --name im-nginx \
  --network mynet \
  --ip 172.20.0.30 \
  -p 281:280 \
  -v /usr/local/soft/im/web_nginx/html:/usr/share/nginx/html \
  -v /usr/local/soft/im/web_nginx/nginx.conf:/etc/nginx/nginx.conf \
  --restart=always \
  nginx
```

Managing an Nginx installed directly on the host (systemd service):

```shell
# Start
systemctl start nginx.service
# Start at boot
systemctl enable nginx.service
# Do not start at boot
systemctl disable nginx.service
# Stop
systemctl stop nginx.service
# Restart
systemctl restart nginx.service
# Show the processes
ps -ef | grep nginx
```

```shell
cd /usr/local/soft/charge/charge_backend/
# Every build below uses the current directory: make sure it holds the matching
# module's jar and Dockerfile before each build.
# Host ports 9200 and 9300 are also published by the elasticsearch container above;
# only one container can bind a given host port at a time.
docker build -t charge-gateway .
docker run -d \
  --name charge-gateway \
  --network mynet \
  --ip 172.20.0.31 \
  -p 8080:8080 \
  --restart=always \
  charge-gateway

docker build -t charge-visual-monitor .
docker run -d \
  --name charge-visual-monitor \
  --network mynet \
  --ip 172.20.0.32 \
  -p 9100:9100 \
  --restart=always \
  charge-visual-monitor

docker build -t charge-modules-gen .
docker run -d \
  --name charge-modules-gen \
  --network mynet \
  --ip 172.20.0.33 \
  -p 9202:9202 \
  --restart=always \
  charge-modules-gen

docker build -t charge-modules-job .
docker run -d \
  --name charge-modules-job \
  --network mynet \
  --ip 172.20.0.34 \
  -p 9203:9203 \
  --restart=always \
  charge-modules-job

docker build -t charge-auth .
docker run -d \
  --name charge-auth \
  --network mynet \
  --ip 172.20.0.35 \
  -p 9200:9200 \
  --restart=always \
  charge-auth

docker build -t charge-modules-system .
docker run -d \
  --name charge-modules-system \
  --network mynet \
  --ip 172.20.0.36 \
  -p 9201:9201 \
  --restart=always \
  charge-modules-system

docker build -t charge-modules-file .
docker run -d \
  --name charge-modules-file \
  --network mynet \
  --ip 172.20.0.37 \
  -p 9300:9300 \
  --restart=always \
  charge-modules-file

docker build -t charge-modules-admin .
docker run -d \
  --name charge-modules-admin \
  --network mynet \
  --ip 172.20.0.38 \
  -p 9204:9204 \
  --restart=always \
  charge-modules-admin

docker run -d \
  --name charge-nginx \
  --network mynet \
  --ip 172.20.0.39 \
  -p 800:800 \
  -v /usr/local/soft/charge/charge_nginx/html:/usr/share/nginx/html \
  -v /usr/local/soft/charge/charge_nginx/nginx.conf:/etc/nginx/nginx.conf \
  --restart=always \
  nginx
```
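Every backend above follows the same pattern: build an image from the directory that holds the jar and Dockerfile, then run it on `mynet` with a fixed IP, one published port and `--restart=always`. The sketch below captures that pattern in a helper. The function name and argument order are illustrative, not part of the original setup. It only wraps the `docker build`/`docker run` flags already used above, and it also removes any old container with the same name first so the name and IP can be reused on redeploy:

```shell
# Build an image from a directory and (re)start a container from it on mynet.
# Usage: deploy_app <build-dir> <image> <container> <ip> <port>
deploy_app() {
  local dir=$1 image=$2 name=$3 ip=$4 port=$5
  docker build -t "$image" "$dir" || return 1
  # Remove a previous container with the same name so --name and --ip are free again
  docker rm -f "$name" > /dev/null 2>&1
  docker run -d \
    --name "$name" \
    --network mynet \
    --ip "$ip" \
    -p "$port:$port" \
    --restart=always \
    "$image"
}
```

For example, `deploy_app /usr/local/soft/sky/sky_backend sky_backend sky-backend 172.20.0.15 8081` reproduces the sky backend commands above. Services that publish several ports or mount volumes, such as the nginx containers, still need their own `docker run` lines.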